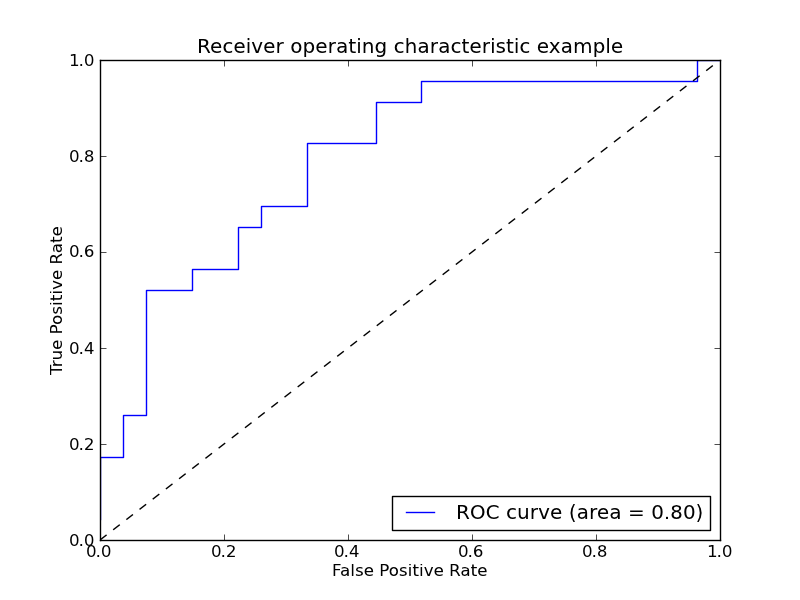

Receiver operating characteristic (ROC)

Example of the receiver operating characteristic (ROC) metric, used to evaluate the quality of a classifier's output.
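To make the metric concrete, here is a minimal NumPy-only sketch of how ROC points can be obtained by sweeping a decision threshold over the classifier scores, and how the area under the curve follows from the trapezoidal rule. The helper names `roc_points` and `trapezoid_area` and the four-sample toy data are illustrative assumptions, not part of scikit-learn or of the script below; the sketch also assumes binary labels in {0, 1} with both classes present.

```python
import numpy as np


def roc_points(y_true, scores):
    """Hypothetical helper: return (fpr, tpr) for a sweep over score thresholds."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)

    # Rank samples from the highest score to the lowest.
    order = np.argsort(-scores, kind="mergesort")
    y_sorted = y_true[order]
    s_sorted = scores[order]

    # Keep the last index of each run of equal scores so ties move together.
    distinct = np.where(np.diff(s_sorted))[0]
    idx = np.r_[distinct, y_sorted.size - 1]

    # Cumulative true positives and false positives at each threshold.
    tps = np.cumsum(y_sorted == 1)[idx]
    fps = (idx + 1) - tps

    # Normalise to rates and prepend the (0, 0) starting point.
    tpr = np.r_[0.0, tps / tps[-1]]
    fpr = np.r_[0.0, fps / fps[-1]]
    return fpr, tpr


def trapezoid_area(x, y):
    """Hypothetical helper: area under a piecewise-linear curve."""
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))


# Toy data (assumed for illustration only).
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]

toy_fpr, toy_tpr = roc_points(toy_labels, toy_scores)
toy_auc = trapezoid_area(toy_fpr, toy_tpr)
print("toy AUC: %.2f" % toy_auc)
```

An area of 1.0 corresponds to a perfect ranking of positives above negatives, while 0.5 corresponds to the diagonal of a random ranking.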

Script output:

Area under the ROC curve : 0.796296

Python source code: plot_roc.py

print(__doc__)

import numpy as np

import matplotlib.pyplot as pl

from sklearn import svm, datasets

from sklearn.utils import shuffle

from sklearn.metrics import roc_curve, auc

random_state = np.random.RandomState(0)

# Import some data to play with

iris = datasets.load_iris()

X = iris.data

y = iris.target

# Make it a binary classification problem by removing the third class

X, y = X[y != 2], y[y != 2]

n_samples, n_features = X.shape

# Add noisy features to make the problem harder

X = np.c_[X, random_state.randn(n_samples, 200 * n_features)]

# Shuffle and split into training and test sets

X, y = shuffle(X, y, random_state=random_state)

half = int(n_samples / 2)

X_train, X_test = X[:half], X[half:]

y_train, y_test = y[:half], y[half:]

# Run classifier

classifier = svm.SVC(kernel='linear', probability=True)

probas_ = classifier.fit(X_train, y_train).predict_proba(X_test)

# Compute ROC curve and area under the curve

fpr, tpr, thresholds = roc_curve(y_test, probas_[:, 1])

roc_auc = auc(fpr, tpr)

print("Area under the ROC curve : %f" % roc_auc)

# Plot ROC curve

pl.clf()

pl.plot(fpr, tpr, label='ROC curve (area = %0.2f)' % roc_auc)

pl.plot([0, 1], [0, 1], 'k--')

pl.xlim([0.0, 1.0])

pl.ylim([0.0, 1.0])

pl.xlabel('False Positive Rate')

pl.ylabel('True Positive Rate')

pl.title('Receiver operating characteristic example')

pl.legend(loc="lower right")

pl.show()
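A ROC curve depends only on how the scores rank the test samples, so calibrated probabilities are not strictly required. As a hedged variant of the script above, the sketch below scores the test set with `decision_function` instead of `predict_proba` and cross-checks the area with `roc_auc_score`. It assumes a recent scikit-learn release in which `train_test_split` lives in `sklearn.model_selection`; the stratified 50/50 split and the `_alt` variable names are choices made here for illustration, so the resulting area will not necessarily match the script output quoted above.

```python
import numpy as np
from sklearn import datasets, svm
from sklearn.metrics import auc, roc_auc_score, roc_curve
from sklearn.model_selection import train_test_split

# Same data preparation as the example: two iris classes plus noisy features.
iris = datasets.load_iris()
X_alt, y_alt = iris.data, iris.target
X_alt, y_alt = X_alt[y_alt != 2], y_alt[y_alt != 2]
rng = np.random.RandomState(0)
X_alt = np.c_[X_alt, rng.randn(X_alt.shape[0], 200 * X_alt.shape[1])]

# Stratified split (an assumption of this sketch) keeps both classes in each half.
X_tr, X_te, y_tr, y_te = train_test_split(
    X_alt, y_alt, test_size=0.5, random_state=0, stratify=y_alt
)

# No probability=True here: the signed distance to the separating
# hyperplane is enough to rank the test samples.
clf_alt = svm.SVC(kernel="linear")
scores_alt = clf_alt.fit(X_tr, y_tr).decision_function(X_te)

fpr_alt, tpr_alt, thresholds_alt = roc_curve(y_te, scores_alt)
auc_alt = auc(fpr_alt, tpr_alt)

# roc_auc_score computes the same quantity directly from labels and scores.
auc_direct = roc_auc_score(y_te, scores_alt)
print("Area under the ROC curve : %f" % auc_alt)
```

Skipping `probability=True` also avoids the extra internal cross-validation that `SVC` runs to calibrate its probability estimates.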