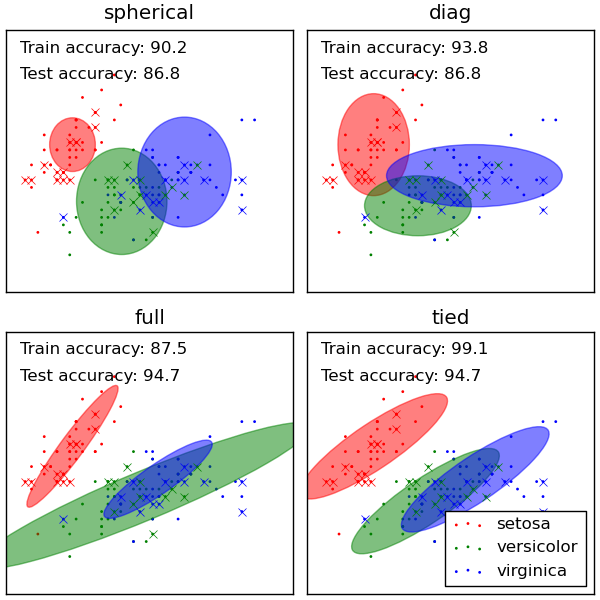

4.1. Gaussian mixture models

scikits.learn.mixture is a package that lets you create mixture models (with spherical, diagonal, tied, or full covariance matrices), sample from them, and estimate their parameters from data using the Expectation-Maximization (EM) algorithm. It can also draw confidence ellipsoids for multivariate models and compute the Bayesian Information Criterion (BIC) to assess the number of clusters in the data.
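Sampling from a mixture is a two-stage process: first pick a component according to the mixing weights, then draw from that component's Gaussian. The sketch below shows this for diagonal covariance matrices using plain NumPy. It is an illustration of the technique, not the scikits.learn.mixture API; the function name `sample_gmm` and its parameters are hypothetical.

```python
import numpy as np

def sample_gmm(weights, means, variances, n_samples, seed=0):
    """Draw samples from a Gaussian mixture with diagonal covariance matrices.

    weights:   (k,)   mixing proportions, summing to 1
    means:     (k, d) component means
    variances: (k, d) per-feature variances (the diagonal of each covariance)
    Returns the samples (n_samples, d) and the component index of each sample.
    """
    rng = np.random.default_rng(seed)
    weights = np.asarray(weights, dtype=float)
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    # Stage 1: choose a component for every sample according to the weights.
    labels = rng.choice(len(weights), size=n_samples, p=weights)
    # Stage 2: draw from the chosen Gaussian. With a diagonal covariance each
    # feature is an independent normal variable with its own variance.
    noise = rng.standard_normal((n_samples, means.shape[1]))
    X = means[labels] + noise * np.sqrt(variances[labels])
    return X, labels
```

For example, `sample_gmm([0.3, 0.7], [[0, 0], [5, 5]], [[1, 1], [0.5, 0.5]], 1000)` returns 1000 two-dimensional points, roughly 70% of them drawn from the component centred at (5, 5).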

For the moment, only Gaussian Mixture Models (GMMs) are implemented. A GMM is a probabilistic model that describes the data as drawn from a mixture of Gaussian probability distributions. The challenge GMMs tackle is learning the parameters of these Gaussians, together with their mixing weights, from the data.
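In the usual notation (the symbols below are introduced here, not taken from the text above), a GMM with $K$ components models the density of a sample $\mathbf{x}$ as a weighted sum of Gaussians:

$$
p(\mathbf{x}) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}\!\left(\mathbf{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k\right),
\qquad \pi_k \ge 0, \quad \sum_{k=1}^{K} \pi_k = 1
$$

Learning the model means estimating the weights $\pi_k$, means $\boldsymbol{\mu}_k$ and covariances $\boldsymbol{\Sigma}_k$. The covariance types listed above constrain each $\boldsymbol{\Sigma}_k$: spherical means $\boldsymbol{\Sigma}_k = \sigma_k^2 \mathbf{I}$, diagonal means each $\boldsymbol{\Sigma}_k$ is a diagonal matrix, tied means all components share a single matrix $\boldsymbol{\Sigma}$, and full leaves each $\boldsymbol{\Sigma}_k$ unconstrained.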

4.1.1. GMM classifier

The GMM object implements a GMM.fit method to learn a Gaussian mixture model from training data. Given test data, the GMM.predict method assigns each sample to the class of the Gaussian it most probably belongs to.
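To make the fit/predict split concrete, here is a minimal plain-NumPy sketch of EM for a diagonal-covariance GMM. It is not the GMM class's implementation. The names `fit_gmm`, `predict` and `bic`, the farthest-point initialisation, and the small variance floor are all hypothetical choices made for this illustration. Fitting alternates an E-step (posterior probability of each component for each sample) with an M-step (re-estimating weights, means and variances from those posteriors). Prediction picks the component with the highest posterior.

```python
import numpy as np

def log_gaussian_diag(X, means, variances):
    """Log-density of every sample under every diagonal Gaussian, shape (n, k)."""
    diff = X[:, None, :] - means[None, :, :]                  # (n, k, d)
    return -0.5 * (np.sum(diff ** 2 / variances, axis=2)
                   + np.sum(np.log(2 * np.pi * variances), axis=1))

def e_step(X, weights, means, variances):
    """Return log responsibilities (n, k) and the total log-likelihood."""
    log_p = np.log(weights) + log_gaussian_diag(X, means, variances)
    m = log_p.max(axis=1, keepdims=True)                       # log-sum-exp trick
    log_norm = m + np.log(np.exp(log_p - m).sum(axis=1, keepdims=True))
    return log_p - log_norm, float(log_norm.sum())

def fit_gmm(X, n_components, n_iter=200, tol=1e-6, seed=0):
    """Fit a diagonal-covariance GMM to X (n, d) with Expectation-Maximization."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    n, d = X.shape
    # Hypothetical initialisation: farthest-point seeding for the means,
    # the global per-feature variance for every component, uniform weights.
    idx = [int(rng.integers(n))]
    for _ in range(1, n_components):
        dist = np.min(((X[:, None, :] - X[idx][None]) ** 2).sum(axis=2), axis=1)
        idx.append(int(dist.argmax()))
    means = X[idx].copy()
    variances = np.tile(X.var(axis=0) + 1e-6, (n_components, 1))
    weights = np.full(n_components, 1.0 / n_components)
    prev_ll = -np.inf
    for _ in range(n_iter):
        # E-step: posterior probability of each component for each sample.
        log_resp, ll = e_step(X, weights, means, variances)
        resp = np.exp(log_resp)
        # M-step: re-estimate weights, means and variances from the posteriors.
        nk = resp.sum(axis=0) + 1e-10
        weights = nk / n
        means = (resp.T @ X) / nk[:, None]
        diff = X[:, None, :] - means[None, :, :]
        variances = np.einsum("nk,nkd->kd", resp, diff ** 2) / nk[:, None] + 1e-6
        if abs(ll - prev_ll) < tol:
            break
        prev_ll = ll
    _, ll = e_step(X, weights, means, variances)               # log-likelihood of final params
    return weights, means, variances, ll

def predict(X, weights, means, variances):
    """Assign each sample to the component with the highest posterior probability."""
    log_resp, _ = e_step(np.asarray(X, dtype=float), weights, means, variances)
    return log_resp.argmax(axis=1)

def bic(log_likelihood, n_components, n_features, n_samples):
    """Bayesian Information Criterion for a diagonal GMM (lower is better)."""
    # Free parameters: (k - 1) weights + k*d means + k*d variances.
    n_params = (n_components - 1) + 2 * n_components * n_features
    return -2.0 * log_likelihood + n_params * np.log(n_samples)
```

On data drawn from two well-separated clusters, `predict` applied to a two-component fit should recover the clusters up to a relabelling of the components. Comparing `bic` across several values of `n_components` is one way to choose the number of clusters.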

Examples:

- See GMM classification for an example of using a GMM as a classifier on the iris dataset.

- See Gaussian Mixture Model Ellipsoids for an example of plotting the confidence ellipsoids.

- See Density Estimation for a mixture of Gaussians for an example of plotting the density estimate.